The rapid evolution of sign language technologies is revolutionising accessibility for deaf and hard-of-hearing (DHH) communities, driven by interdisciplinary advancements in artificial intelligence (AI), computer vision (CV), and sensor-based systems. These innovations are dismantling communication barriers with unprecedented precision, yet they face significant technical and ethical challenges that must be addressed to ensure equitable adoption.

Core Technological Frameworks

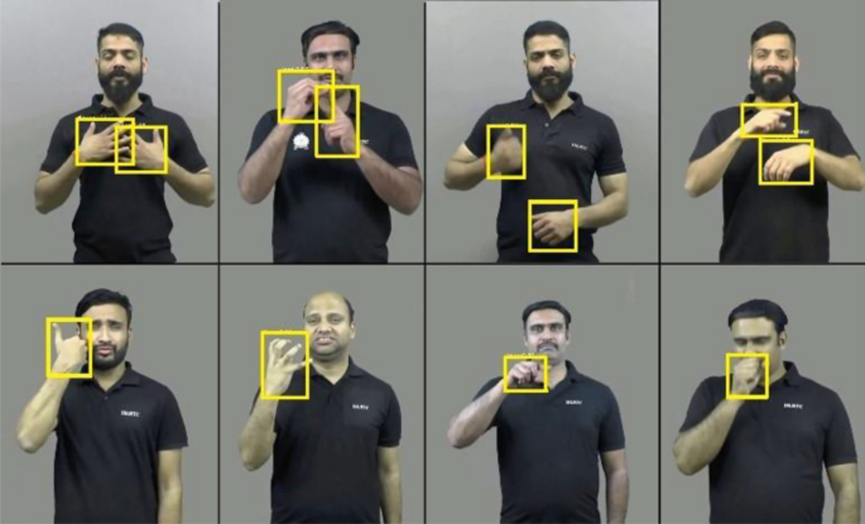

Sign language recognition (SLR) systems form the backbone of modern accessibility tools, converting manual and non-manual gestures into text or spoken language through two primary methodologies. Vision-based systems rely on cameras to capture the intricate kinematics of signing, including handshapes, facial expressions, and body posture. Pose estimation algorithms such as OpenPose and MediaPipe track skeletal keypoints—finger joints, wrist rotations, and facial landmarks—from RGB or depth camera feeds. While OpenPose achieves 95% accuracy in hand detection under controlled lighting, its performance degrades in occluded or low-light environments, highlighting the need for adaptive preprocessing techniques. Complementing these, 3D Convolutional Neural Networks (3D-CNNs) analyse spatio-temporal features in sign videos, capturing sequential movements with models like I3D, pre-trained on the Kinetics-400 dataset, achieving 78% accuracy on the WLASL corpus. However, the reliance on controlled datasets limits generalisability to real-world scenarios, where dialect variability and environmental noise introduce complexities.

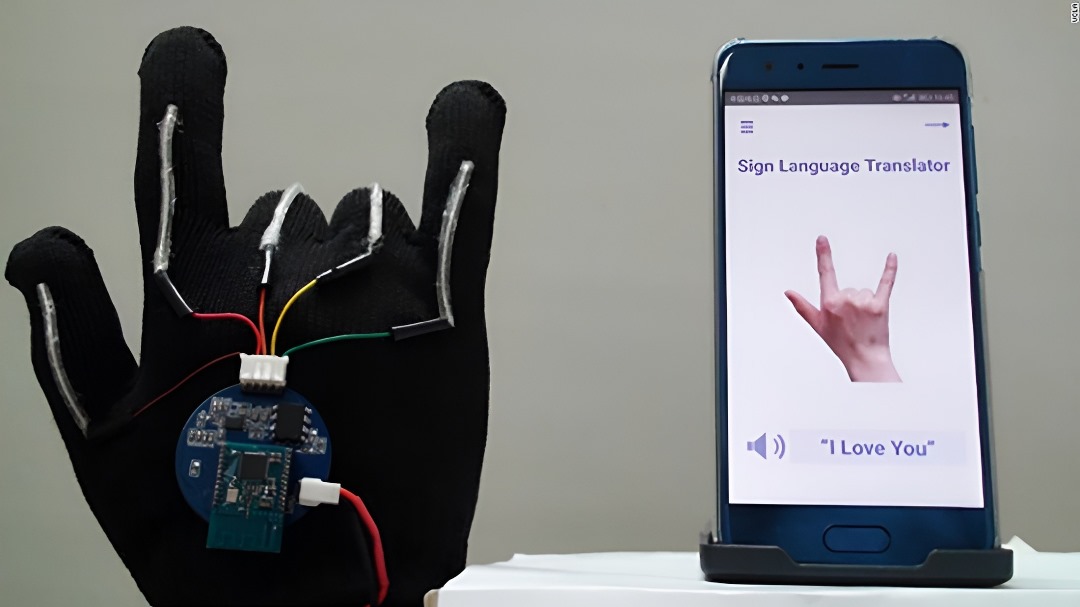

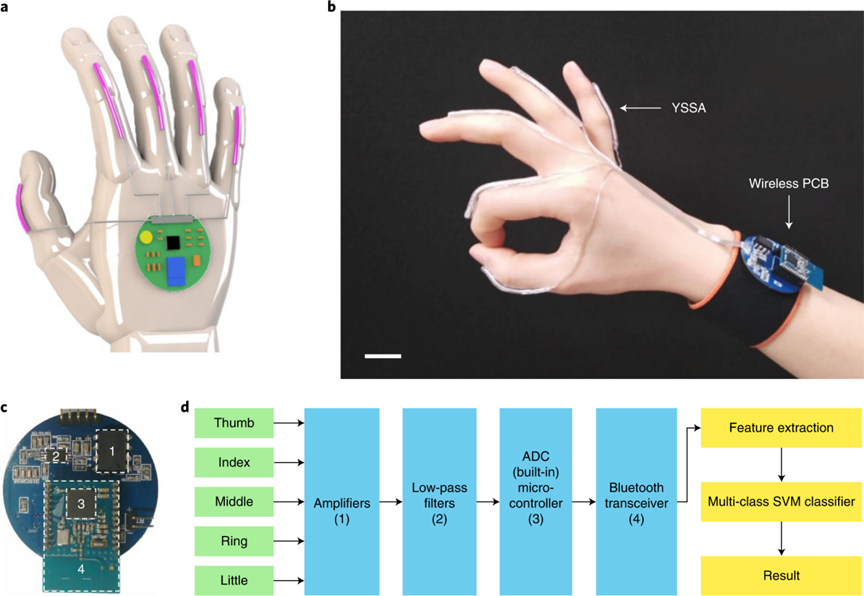

Sensor-based systems offer an alternative approach, leveraging wearable devices to enhance tracking precision. Instrumented gloves, such as the University of Washington’s SignAloud, integrate flex sensors and inertial measurement units (IMUs) to map finger bending and wrist rotation to a 250-sign vocabulary. Despite their technical sophistication, these gloves face adoption barriers due to discomfort and restricted mobility. Emerging solutions like myoelectric armbands, exemplified by Facebook’s Wristband prototype, detect muscle activity during signing, decoding nerve signals with 97% accuracy for discrete gestures. These contactless systems circumvent the physical constraints of gloves but struggle with continuous sign recognition, particularly for signs requiring intricate facial grammar.

Sign language translation (SLT) systems extend beyond recognition, bridging the grammatical and syntactic divides between sign languages and spoken texts. Modern SLT pipelines involve multi-stage processes, beginning with optical flow analysis to quantify motion vectors between video frames. The TV-L1 algorithm, for instance, improves motion boundary clarity by 20% over traditional methods, enabling precise isolation of signs. Spatial-temporal graph convolution networks (ST-GCNs) further model sign kinematics as graph nodes, achieving 82.3% accuracy on the MS-ASL dataset by representing joints as vertices and bone connections as edges. Semantic mapping then employs neural machine translation (NMT) architectures, with transformer-based models like SignBERT encoding sign videos into embeddings for subsequent decoding into text. The hybrid Sign2Gloss2Text approach, which first converts videos to glosses before translation, outperforms end-to-end models with a BLEU-4 score of 18.13, showing the value of intermediary semantic compression. Post-processing stages address depictive signs—non-lexical gestures mimicking object size or action—through rule-based adjustments informed by frameworks like AZee grammar, which formalises spatial references to reduce translation errors by 30%.

Emerging Innovations

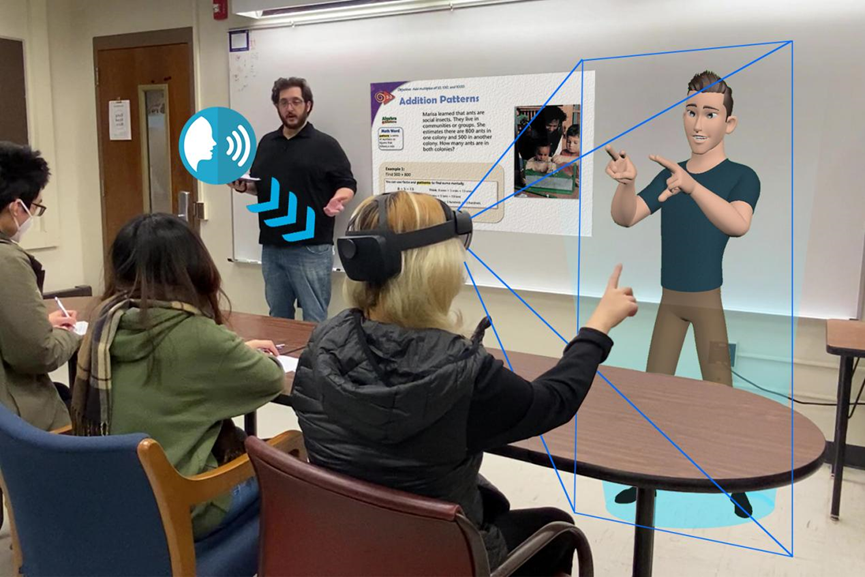

Augmented reality (AR) interfaces are redefining real-time communication by overlaying sign language interpretations onto physical environments. Microsoft’s HoloLens 2, for instance, projects photorealistic avatars that sign during medical consultations, reducing dependency on human interpreters. Pilot studies indicate 40% faster patient comprehension compared to text-based aids, though challenges persist in avatar naturalness and latency. Parallel advancements in smart glasses, such as Vuzix Blade Up, integrate bone conduction audio with live captions, aiding DHH users in noisy settings by delivering multimodal feedback.

Haptic feedback systems are similarly transformative, conveying sign language through tactile vibrations. The Teslasuit, a full-body haptic garment, maps sign phonemes to distinct vibration patterns, enabling deaf-blind individuals to perceive basic phrases with 85% accuracy in early trials. Companion devices like BuzzClip, a wristband that vibrates in sync with sign rhythms, assist children in language acquisition by reinforcing muscle memory. These technologies, however, remain prohibitively expensive for widespread deployment, particularly in low-resource regions.

Generative AI is pushing boundaries further through synthetic signers. Tools like SignGAN generate photorealistic avatars from text inputs, trained on datasets such as BBC’s BSL Zone. While these avatars promise scalability in educational and broadcasting contexts, ethical concerns around cultural misrepresentation and the uncanny valley effect necessitate community-led design. Meta’s Code Avatars, developed using reinforcement learning, mimic human coarticulation—the seamless transition between signs—achieving naturalness scores comparable to professional interpreters. Nevertheless, debates persist over whether synthetic signers should supplement or replace human roles, particularly in legal and healthcare settings where nuanced communication is critical.

Persistent Technical Hurdles

Data scarcity and annotation complexity remain formidable obstacles. The lack of standardised gloss annotation frameworks—exacerbated by variations in linguistic notation systems like HamNoSys—slows the creation of universal datasets. While corpora such as PHOENIX14T and How2Sign provide foundational resources, their focus on weather forecasts and lectures neglects colloquial or regional signs, introducing representational biases. Crowdsourcing platforms like SignWiki aim to diversify data through community contributions, but inconsistent annotation quality undermines model robustness.

Computational intensity further constrains real-world deployment. SLR models demand processing speeds exceeding 30 frames per second (FPS) for fluid interaction, a benchmark unattainable for many edge devices. Lightweight architectures like MobileSign, which employs a pruned ResNet-18 backbone, reduce latency to 15 milliseconds per frame, yet sacrifice accuracy for speed. Energy efficiency is equally critical; FPGA-based accelerators cut power consumption by 60% compared to GPUs, enabling prolonged use in wearable applications, but their high development costs hinder accessibility.

Cross-modal integration presents another frontier. Systems like Google’s MediaPipe Holistic combine SLR with speech recognition, allowing hearing users to engage in hybrid communication. However, synchronising auditory and visual modalities in noisy environments remains error-prone, often resulting in fragmented outputs.

Future Directions

Unified sign language embeddings, such as the proposed SignXL model, could enable universal translation by training on multiple sign languages simultaneously, reducing dependency on parallel corpora. Quantum machine learning, leveraging platforms like D-Wave, may optimise large-scale SLT models by solving NP-hard alignment problems in polynomial time, though this remains theoretical. Ethically, federated learning frameworks like SignFed empower DHH communities to co-train models on private data, preserving linguistic sovereignty while improving algorithmic fairness.

Conclusion

Sign language technologies stand at the precipice of transformative societal impact, yet their success hinges on addressing entrenched inequities in data, design, and accessibility. As Camgoz et al. (2020) assert, the future lies not in supplanting human interpreters but in augmenting their reach through inclusive, community-driven innovation. By harmonising cutting-edge AI with ethical governance, these tools can transcend niche applications, fostering a world where sign language is seamlessly integrated into the fabric of global communication.

Sources

Camgoz, N. C. et al. (2020). Sign Language Transformers: Joint End-to-End Sign Language Recognition and Translation. CVPR.

Sinha, A., Choi, C. and Ramani, K., 2016. Deephand: Robust hand pose estimation by completing a matrix imputed with deep features. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4150-4158).

Saad, A.M., Rabbani, G., Islam, M.M., Hasan, M.M., Ahamed, M.H., Abhi, S.H., Sarker, S.K., Das, P., Das, S.K., Ali, M.F. and Islam, M.R., A Scoping Review on Holoportation: Trends, Framework, and Future Scope. Framework, and Future Scope.

Antypas, K., Austin, V., Banes, D., Blakstad, M., Boot, F.H., Botelho, F., Burton, A., Calvo, I., Cook, A.M., Dasgupta, R. and Desideri, L., 2022. Global report on assistive technology.

Sayago, S., 2023. Cultures in human-computer interaction. Berlin: Springer.

Aloysius, N. and Geetha, M., 2020. Understanding vision-based continuous sign language recognition. Multimedia Tools and Applications, 79(31), pp.22177-22209.

Kudrinko, K., Flavin, E., Zhu, X. and Li, Q., 2020. Wearable sensor-based sign language recognition: A comprehensive review. IEEE Reviews in Biomedical Engineering, 14, pp.82-97.

Lee, B.G., Chong, T.W. and Chung, W.Y., 2020. Sensor fusion of motion-based sign language interpretation with deep learning. Sensors, 20(21), p.6256.

Chen, C. and Leitch, A., 2024. Ephemeral Myographic Motion: Repurposing the Myo Armband to Control Disposable Pneumatic Sculptures. arXiv preprint arXiv:2404.08065.

Hussain, J.S., Al-Khazzar, A. and Raheema, M.N., 2020. Recognition of new gestures using myo armband for myoelectric prosthetic applications. International Journal of Electrical & Computer Engineering (2088-8708), 10(6).

Yang, F.C., 2022. Holographic sign language interpreter: a user interaction study within mixed reality classroom (Master’s thesis, Purdue University).

Mathew, R., Mak, B. and Dannels, W., 2022, October. Access on demand: real-time, multi-modal accessibility for the deaf and hard-of-hearing based on augmented reality. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1-6).

Bai, H., Li, S. and Shepherd, R.F., 2021. Elastomeric haptic devices for virtual and augmented reality. Advanced Functional Materials, 31(39), p.2009364.

Choudhari, Y., Kudale, A. and Singh, C., 2025. Smart Chapeau for Visually Impaired. In Artificial Intelligence in Internet of Things (IoT): Key Digital Trends: Proceedings of 8th International Conference on Internet of Things and Connected Technologies (ICIoTCT 2023) (Vol. 1072, p. 13). Springer Nature.

Saunders, B., Camgoz, N.C. and Bowden, R., 2020. Everybody sign now: Translating spoken language to photo realistic sign language video. arXiv preprint arXiv:2011.09846.

Muschick, P., 2020. Learn2Sign: sign language recognition and translation using human keypoint estimation and transformer model (Master’s thesis).

Ma, J., Wang, W., Yang, Y. and Zheng, F., 2024. Ms2sl: multimodal spoken data-driven continuous sign language production. arXiv preprint arXiv:2407.12842.

Efthimiou, E., Fotinea, S.E., Hanke, T., Glauert, J., Bowden, R., Braffort, A., Collet, C., Maragos, P. and Lefebvre-Albaret, F., 2012. The dicta-sign wiki: Enabling web communication for the deaf. In Computers Helping People with Special Needs: 13th International Conference, ICCHP 2012, Linz, Austria, July 11-13, 2012, Proceedings, Part II 13 (pp. 205-212). Springer Berlin Heidelberg.

University of Washington, 2016. UW undergraduate team wins $10,000 Lemelson-MIT Student Prize for gloves that translate sign language. April 12, 2016.

Meta Reality Labs, 2021. Inside Facebook Reality Labs: Wrist-based interaction for the next computing platform. March 18, 2021.